How to make a Self Driving Robot

Building a robot requires knowledge of both hardware and software, and if we want that robot to navigate intelligently we also need to apply machine learning and computer vision techniques. Robots have been in development for a long time (History of Robots); one of the best-known autonomous robots is the Roomba, first launched in 2002 by iRobot. In the last few years there has been a lot of development in delivery robots from companies like Starship Technologies, Amazon Scout, Fetch Robotics, Robby Technologies, Marble, Postmates Serve, FedEx Roxo (based on DEKA iBot), Otto Motors, Refraction AI and Omron. There are also several indoor robots for homes, restaurants, hotels and hospitals, like Savioke, Bear Robotics and Samsung Ballie. Some of these robots have been tested by companies like Domino’s and FedEx. Here I am only including smaller robots which use the sidewalks and move at slow speeds; bigger delivery vehicles and self-driving cars are outside the scope of this post. I will cover how to build a self-driving delivery robot.

Fun Projects

Donkey Car is one of my favorite open source projects. It started in 2016, well before similar cars like AWS DeepRacer. It has a very active and friendly Slack community, and races are held all over the world. It’s also the lowest-cost option, with all the parts costing around $250, and it takes about 2 hours to assemble. Adding more sensors or a bigger battery can raise the cost considerably. It uses a Raspberry Pi as the main processor (a Jetson Nano can also be used). Accelerators like the Coral Edge TPU USB and other sensors (radar, IMU etc) can be added. The basic version uses only one camera to race on a track after some training; there is no radar, lidar or IMU.

AWS DeepRacer was launched by Amazon in 2019 for $399 as a tool for developers to get started with reinforcement learning. The DeepRacer simulator allows us to train models in a simulated environment, and one can compete in the DeepRacer League. AWS RoboMaker is the AWS cloud service for the development and deployment of robotics applications at scale. It supports ROS and other tools to make development easier.

Donkey Car is a DIY project for people who want to experiment with both hardware and software, whereas AWS DeepRacer is for people who only want to focus on software and want a nice-looking car. Other similar projects are F1Tenth and Upbot. TurtleBot is another low-cost robot used for research and education.

The above are fun projects and can be used to develop some software and run simulations. However, each has its limitations, and to develop our own robot we need to look at which components are required.

Hardware and Specs

Let’s choose the specifications below as our starting point →

Speed → 5 km/h (3.1 mph, close to pedestrian speed)

Weight Carrying Capacity → 5 kg (11 pounds)

Connectivity → GPS, Mobile (3G/4G/5G), Wi-Fi, Bluetooth

Design Trade-offs → We want to minimize the total cost, maximize the range (or battery life) and have the best user experience!

Sensors → RGB camera, Depth camera, Radar

The Brain — Compute

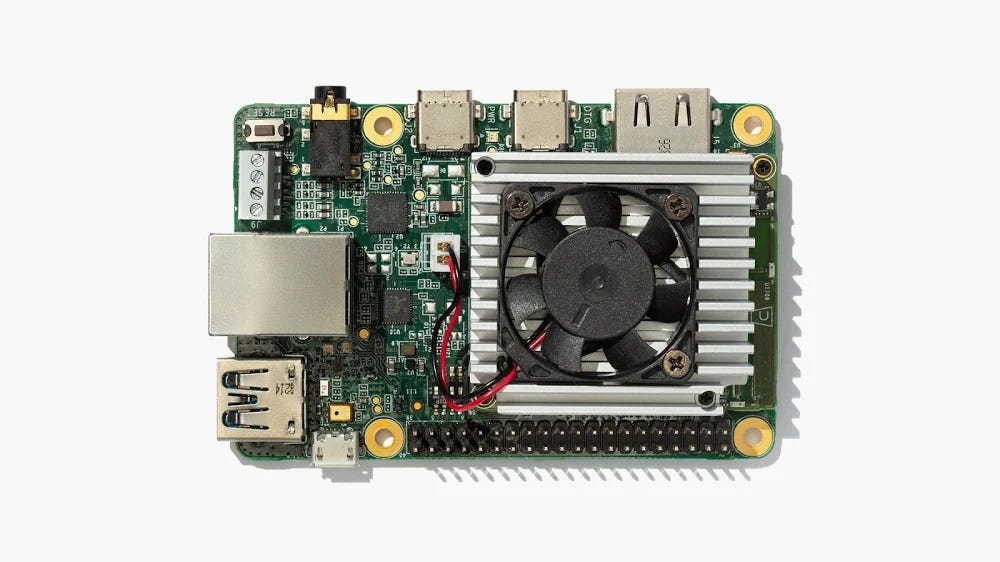

Raspberry Pi 4 is the latest version of the RPi and can be used as the main brain of the robot. Since it can run Ubuntu, it supports ROS (Robot Operating System) and PyTorch/TensorFlow. We can also add compute by connecting an Edge TPU USB Accelerator or a Movidius USB stick. To learn more about the NVIDIA Jetson Nano and the Coral Edge TPU Dev Board check the post below —

We can also make a custom board with more than one Edge TPU by using the MCM module or the PCIe module. The smaller, less power-hungry boards would be suitable for personal robots like Roomba and Ballie, which don’t carry a payload, move in a more controlled environment (a house or restaurant rather than city sidewalks) and have shorter distances to travel.

More Compute

The compute power on boards like the RPi 4, Coral Edge TPU and Jetson Nano might not be sufficient for all the computer vision tasks involved in a self-driving robot. Boards with more compute include the NVIDIA Jetson TX2, Jetson Xavier NX and Jetson Orin. The Xavier NX is much more efficient and powerful than the Jetson TX2: it can perform 14 TOPS at 10 W (21 TOPS at 15 W). The Jetson Orin NX can deliver up to 100 TOPS (16GB version) within a 25 W power budget.

Several of the current delivery robots use the Jetson TX1 or TX2. I will go with the Jetson Orin NX 8GB, as it offers the most compute of the boards above at a cost that is not too high ($399). One can also build a custom deep learning accelerator →

Battery — The size of the battery determines how far the robot can travel before it has to be recharged.
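As a rough illustration of how battery size translates into distance, the sketch below divides usable energy by average power draw to get runtime, then multiplies by speed. The pack size (24 V, 10 Ah), the 60 W average draw and the 80% usable fraction are made-up numbers for illustration, not measurements; only the 5 km/h speed comes from the specs above. The function name `estimate_range_km` is hypothetical.

```python
def estimate_range_km(battery_wh, avg_power_w, speed_kmh, usable_fraction=0.8):
    """Back-of-envelope range: usable energy / average draw = hours, hours * speed = km."""
    if avg_power_w <= 0:
        raise ValueError("avg_power_w must be positive")
    runtime_h = battery_wh * usable_fraction / avg_power_w
    return runtime_h * speed_kmh


# Assumed example pack: 24 V * 10 Ah = 240 Wh.
# Assumed average draw (motors + compute + sensors): 60 W.
battery_wh = 24 * 10
range_km = estimate_range_km(battery_wh, avg_power_w=60, speed_kmh=5)
print(f"Estimated range: {range_km:.1f} km")  # Estimated range: 16.0 km
```

Real range will depend on terrain, payload, temperature and how much power the compute board draws, so an estimate like this is only a starting point for sizing the battery.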

Motor and Motor Controllers — Battery-powered robots typically use brushless DC motors. The motor controller is the board which controls the supply of current to the motor. For more details read this App note from TI.

Connectivity — The fastest solution is to use a module which includes GPS, mobile connectivity, Wi-Fi and Bluetooth. There are several combined Wi-Fi and Bluetooth modules (e.g. ESP32, EMW3239) and GPS + 3G modules.
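To make the motor controller's job a little more concrete, here is a minimal sketch of what the software side might send to it. It assumes a two-wheel differential-drive layout (this post does not fix a drive layout, so that is an assumption), a 0.4 m distance between the wheels and a top wheel speed of 1.5 m/s. The function names `wheel_speeds` and `to_duty` are hypothetical and not tied to any particular controller's API.

```python
def wheel_speeds(linear_mps, angular_radps, track_width_m):
    """Differential-drive kinematics: return (left, right) wheel speeds in m/s."""
    half_track = track_width_m / 2.0
    left = linear_mps - angular_radps * half_track
    right = linear_mps + angular_radps * half_track
    return left, right


def to_duty(speed_mps, max_speed_mps):
    """Map a wheel speed to a signed duty cycle in [-1, 1], clamped at the limits."""
    return max(-1.0, min(1.0, speed_mps / max_speed_mps))


# 5 km/h from the specs is about 1.39 m/s; add a gentle left turn of 0.5 rad/s.
left, right = wheel_speeds(5 / 3.6, 0.5, track_width_m=0.4)
print(to_duty(left, 1.5), to_duty(right, 1.5))
```

A real controller for brushless motors also handles commutation and current limiting in hardware or firmware; the duty cycle here only stands in for the speed command the main computer would issue.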

Sensors

Sensors in a robot are analogous to human senses like vision, hearing and touch. Cameras and lidar can be used for vision, microphones for hearing and pressure sensors (like the capacitive touch sensor in a phone) for touch.

Camera — Camera modules (like the RPi Camera) with different sensor resolutions are easily available.

Radar

Lidar and Depth Camera — Lidars from companies like Velodyne can be quite expensive. To keep cost low we can rely only on cameras and radars and use techniques like VSLAM.

Intel Depth Camera — Intel provides several depth cameras based on different sensing technologies (LIDAR, Active IR Stereo, Coded light).

Intel RealSense Tracking Camera T265 → This device performs SLAM (Simultaneous Localization and Mapping) on the device itself (using a Movidius Myriad 2 VPU). It has two fisheye lenses with a combined 163±5° FOV. It can be used with the Intel D400 series depth cameras.

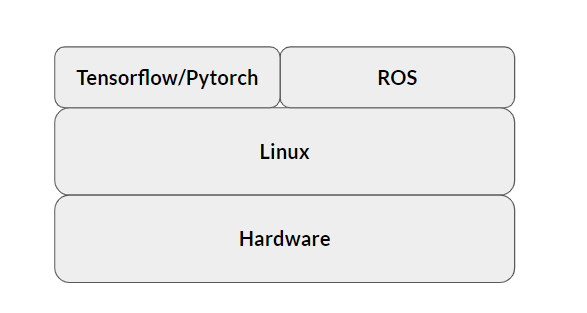

Software

ROS — Robot Operating System (ROS) is an open source project used in a large number of commercial and research robots. It’s not an actual operating system; it runs on top of Linux (Ubuntu, Debian etc).

Gazebo is an open source tool for simulating indoor and outdoor robots. It has a physics engine and works with ROS. It has a model editor to build your robot and a building editor to build a model of your indoor environment; you can then create a world which includes both the robot and the building model. Gazebo has several tutorials on how to build a world, a robot etc.

CARLA — another open source simulator, more focused on self-driving cars.

NVIDIA Isaac SDK — provides several building blocks for robot development.

Vision Tasks

Visual SLAM (Simultaneous Localization and Mapping) is the process of constructing or updating a map of an environment while keeping track of the robot’s position in it (localization), using only cameras as sensors (no lidar, radar etc).

Object Detection

Open3D — an open source C++ library for fast manipulation of 3D data.

Detectron2 — PyTorch-based library with object detection models like Mask R-CNN etc.

YOLOv5 — fast object detection model; can be exported to ONNX to run on RPi 4, Jetson Nano etc.

PyRobot — an open source Python package from Facebook for robotics research in manipulation and navigation.

Kaolin — PyTorch-based library for 3D deep learning

Kalman filter, Particle filter, A* algorithm
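Two of the algorithms listed above are small enough to sketch in plain Python. The first function is a scalar Kalman filter that smooths noisy readings of a roughly constant quantity (for example one coordinate of a position fix). The second is A* path planning on a small occupancy grid. The noise variances, the grid layout and the function names `kalman_1d` and `astar` are illustrative assumptions, not part of any library mentioned in this post, and a real robot would use multi-dimensional versions of both.

```python
import heapq


def kalman_1d(measurements, meas_var, process_var, x0=0.0, p0=1.0):
    """Scalar Kalman filter with a constant-state model; returns all estimates."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        p = p + process_var      # predict: state unchanged, uncertainty grows
        k = p / (p + meas_var)   # Kalman gain: how much to trust the measurement
        x = x + k * (z - x)      # update the estimate toward the measurement
        p = (1.0 - k) * p        # uncertainty shrinks after the update
        estimates.append(x)
    return estimates


def astar(grid, start, goal):
    """A* on a 4-connected grid; grid[r][c] == 1 is an obstacle.

    Returns the list of (row, col) cells from start to goal, or None.
    """
    def heuristic(cell):
        # Manhattan distance: admissible for 4-connected unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    rows, cols = len(grid), len(grid[0])
    open_heap = [(heuristic(start), 0, start)]
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost.get(cell, float("inf")):
            continue  # stale heap entry, a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


# Made-up 3x3 map: the middle row is blocked except for the right-hand cell.
grid = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(astar(grid, (0, 0), (2, 0)))
print(kalman_1d([5.2, 4.9, 5.1, 5.0], meas_var=1.0, process_var=0.01)[-1])
```

In practice the filter output (where the robot thinks it is) feeds the planner's start cell, and the grid comes from the map built by SLAM.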

Learning Resources

There are a lot of free resources to learn from and I will only list some. Before taking any paid course I would try the free resources —

In the coming years we will see a lot more robots being developed, moving around us, performing tasks and even talking to us! To learn more about robotics and hardware you can follow me on Twitter — https://twitter.com/MSuryavansh